CML with DVC

In many ML projects, data isn't stored in a Git repository and needs to be downloaded from external sources. DVC is a common way to bring data to your CML runner. DVC also lets you run pipelines and plot changes in metrics for inclusion in CML reports.

The .github/workflows/cml.yaml file to create this report is:

name: CML & DVC

on: [push]

jobs:

train-and-report:

runs-on: ubuntu-latest

# container: docker://ghcr.io/iterative/cml:0-dvc2-base1

steps:

- uses: actions/checkout@v3

- uses: actions/setup-python@v4

with:

python-version: '3.x'

- uses: iterative/setup-cml@v1

- uses: iterative/setup-dvc@v1

- name: Train model

env:

AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}

AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}

run: |

pip install -r requirements.txt # Install dependencies

dvc pull data --run-cache # Pull data & run-cache from S3

dvc repro # Reproduce pipeline

- name: Create CML report

env:

REPO_TOKEN: ${{ secrets.GITHUB_TOKEN }}

run: |

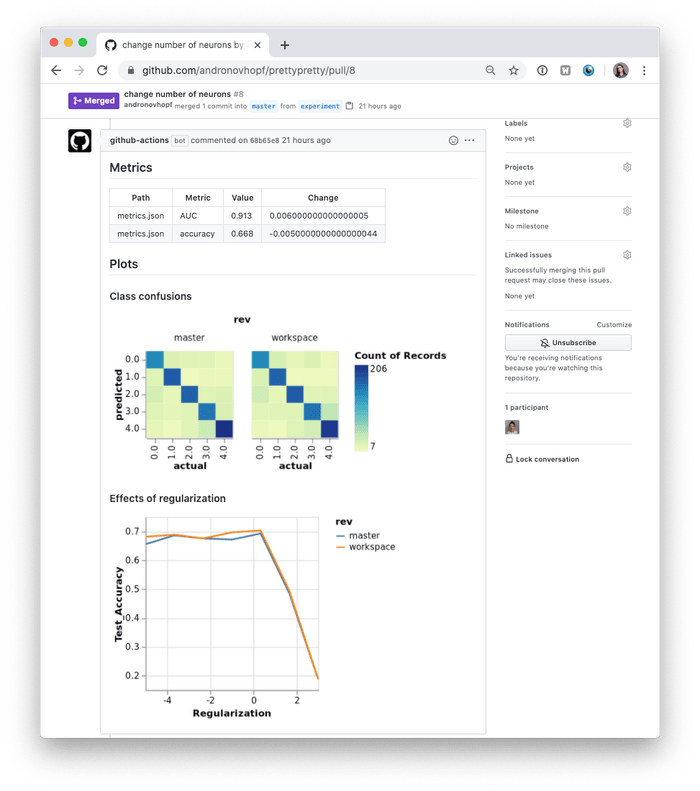

echo "## Metrics: workflow vs. main" >> report.md

git fetch --depth=1 origin main:main

dvc metrics diff master --show-md >> report.md

echo "## Plots" >> report.md

echo "### Class confusions" >> report.md

dvc plots diff \

--target classes.csv \

--template confusion \

-x actual \

-y predicted \

--show-vega master > vega.json

vl2png vega.json -s 1.5 > plot.png

echo '' >> report.md

echo "### Effects of regularization" >> report.md

dvc plots diff \

--target estimators.csv \

-x Regularization \

--show-vega master > vega.json

vl2png vega.json -s 1.5 > plot-diff.png

echo '' >> report.md

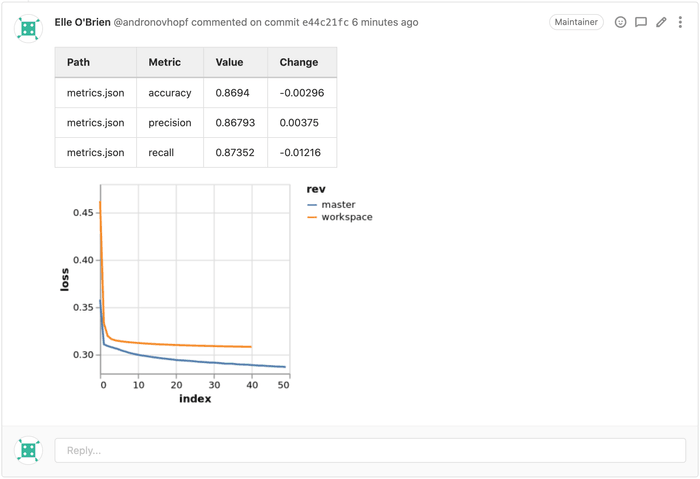

echo "### Training loss" >> report.md

dvc plots diff \

--target loss.csv --show-vega main > vega.json

vl2png vega.json > plot-loss.png

echo '' >> report.md

cml comment create report.mdSee the example repository for more, or check out the use cases for machine learning.
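Each plot section in the workflow follows the same pattern: write a heading, render a PNG, and reference that PNG from the report. The Markdown part of that pattern could be factored into a small shell function. This is only a minimal sketch: `add_plot_section` is a hypothetical helper name (not part of CML or DVC), and it assumes the image is referenced by a relative path from `report.md`.

```shell
# Hypothetical helper (not part of CML or DVC): append a "###" heading and
# an embedded image reference to a Markdown report.
# Arguments: <report file> <heading text> <image path>
add_plot_section() {
  _report=$1
  _heading=$2
  _image=$3
  printf '### %s\n![](%s)\n' "$_heading" "$_image" >> "$_report"
}

# Possible use inside the "Create CML report" step
# (requires dvc and vl2png, so it is left commented out here):
#   dvc plots diff --target loss.csv --show-vega main > vega.json
#   vl2png vega.json > plot-loss.png
#   add_plot_section report.md "Training loss" ./plot-loss.png
```

Keeping the `dvc plots diff` and `vl2png` calls outside the helper leaves their per-plot options (template, axes, scale) visible in the workflow itself.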

GitHub Actions: setup-dvc

The iterative/setup-dvc action installs DVC (similar to what setup-cml does for CML).

This action works on Ubuntu, macOS, and Windows runners. When running on Windows, Python 3 should be set up first.

    steps:
      - uses: actions/checkout@v3
      - uses: iterative/setup-dvc@v1

On Windows runners:

    runs-on: windows-latest
    steps:
      - uses: actions/checkout@v3
      - uses: actions/setup-python@v4
        with:
          python-version: '3.x'
      - uses: iterative/setup-dvc@v1

A specific DVC version can be installed using the version argument (defaults to the latest release).

    - uses: iterative/setup-dvc@v1
      with:
        version: '1.0.1'

The .gitlab-ci.yml file to create this report is:

train-and-report:

image: iterativeai/cml:0-dvc2-base1 # Python, DVC, & CML pre-installed

script:

- pip install -r requirements.txt # Install dependencies

- dvc pull data --run-cache # Pull data & run-cache from S3

- dvc repro # Reproduce pipeline

# Create CML report

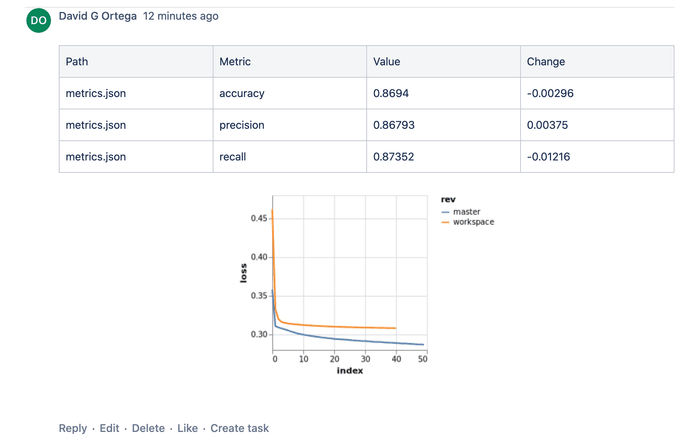

- echo "## Metrics: workflow vs. main" >> report.md

- git fetch --depth=1 origin main:main

- dvc metrics diff --show-md main >> report.md

- echo "## Plots" >> report.md

- echo "### Training loss function diff" >> report.md

- dvc plots diff --target loss.csv --show-vega main > vega.json

- vl2png vega.json > plot.png

- echo '' >> report.md

- cml comment create report.mdSee the example repository for more, or check out the use cases for machine learning.

The bitbucket-pipelines.yml file to create this report is:

    image: iterativeai/cml:0-dvc2-base1 # Python, DVC, & CML pre-installed
    pipelines:
      default:
        - step:
            name: Train model
            script:
              - pip install -r requirements.txt # Install dependencies
              - dvc pull data --run-cache # Pull data & run-cache from S3
              - dvc repro # Reproduce pipeline
        - step:
            name: Create CML report
            script:
              # Quoted because an unquoted ': ' would make YAML parse this line as a mapping
              - 'echo "## Metrics: workflow vs. main" >> report.md'
              - git fetch --depth=1 origin main:main
              - dvc metrics diff --show-md main >> report.md

              - echo "## Plots" >> report.md
              - echo "### Training loss function diff" >> report.md
              - dvc plots diff --target loss.csv --show-vega main > vega.json
              - vl2png vega.json > plot.png
              - echo '![](./plot.png)' >> report.md

              - cml comment create report.md

Cloud Storage Provider Credentials

There are many supported cloud storage providers. Authentication credentials can be provided via environment variables. Here are a few examples for some of the most frequently used providers:

Amazon S3: AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, AWS_SESSION_TOKEN (optional)

Azure: AZURE_STORAGE_CONNECTION_STRING, AZURE_STORAGE_CONTAINER_NAME

Aliyun OSS: OSS_BUCKET, OSS_ACCESS_KEY_ID, OSS_ACCESS_KEY_SECRET, OSS_ENDPOINT

Google Cloud Storage: GOOGLE_APPLICATION_CREDENTIALS (the path to a service account JSON file)

Google Drive: GDRIVE_CREDENTIALS_DATA (the contents of a service account JSON file). See how to set up a Google Drive DVC remote for more information.
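A missing credential variable often shows up only as an authentication error in the middle of `dvc pull`, so it can be convenient to check for the expected variables first. The following is a minimal sketch under that assumption; `require_env` is a hypothetical helper name, not something DVC or CML provides, and the variable names passed to it are the ones listed above.

```shell
# Hypothetical helper (not part of DVC or CML): return non-zero if any of the
# named environment variables is unset or empty, naming each one on stderr.
require_env() {
  _missing=0
  for _name in "$@"; do
    # Look up the variable whose name is stored in $_name (POSIX-compatible).
    eval "_value=\${$_name-}"
    if [ -z "$_value" ]; then
      echo "missing environment variable: $_name" >&2
      _missing=1
    fi
  done
  return "$_missing"
}

# Possible use before pulling from an S3 remote
# (requires dvc, so it is left commented out here):
#   require_env AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY && dvc pull data --run-cache
```

The function only checks that the variables are non-empty; it does not verify that the credentials are valid for the remote.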

Runner Access Permissions

When using object storage remotes (like AWS s3 or GCP gs) with cml runner, DVC can be granted fine-grained access. Instead of resorting to dedicated credentials & managing additional keys, the --cloud-permission-set option provides granular control.

Networking cost and transfer time can also be reduced using an appropriate --cloud-region. For example, AWS has free network transfers from a DVC remote s3 to a CML runner ec2 instance within the same region.

    $ cml runner launch \
      --cloud=aws \
      --cloud-region=us-west \
      --cloud-type=m+t4 \
      --cloud-permission-set=arn:aws:iam::1234567890:instance-profile/dvc-s3-access \
      --labels=cml-gpu

    $ cml runner launch \
      --cloud=gcp \
      --cloud-region=us-west \
      --cloud-type=m+t4 \
      --cloud-permission-set=dvc-sa@myproject.iam.gserviceaccount.com,scopes=storage-rw \
      --labels=cml-gpu
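The two invocations differ only in `--cloud` and in the format of the `--cloud-permission-set` value (an instance profile ARN on AWS, a service account plus scopes on GCP). As a rough sketch of how a script might select between them, the hypothetical function below prints the command for a given cloud instead of running it, so it can be inspected without cloud credentials. The function name is an assumption, and the permission-set values are the placeholder examples from this section.

```shell
# Hypothetical wrapper (not part of CML): print the `cml runner launch`
# command for the given cloud, using the placeholder values from this section.
runner_launch_cmd() {
  case "$1" in
    aws) _perm='arn:aws:iam::1234567890:instance-profile/dvc-s3-access' ;;
    gcp) _perm='dvc-sa@myproject.iam.gserviceaccount.com,scopes=storage-rw' ;;
    *)
      echo "no permission-set example for cloud: $1" >&2
      return 1
      ;;
  esac
  printf 'cml runner launch --cloud=%s --cloud-region=us-west --cloud-type=m+t4 --cloud-permission-set=%s --labels=cml-gpu\n' \
    "$1" "$_perm"
}

# Print the AWS variant for review.
runner_launch_cmd aws
```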